Introduction: Automating the precision loop in orbit determination

For Guidance, Navigation, and Control (GNC) engineers, precision is mission-critical. Every orbit determination must maintain positional accuracy within tight margins, often as small as five meters. When managing a satellite, even a small deviation beyond that threshold can compromise mission objectives, data integrity, and overall system reliability.

Validating this level of precision across multiple simulation and flight datasets is complex. GNC engineers rely on six-degree-of-freedom (6DOF) simulations to model a satellite’s motion and verify that onboard estimation algorithms perform correctly. These simulations produce large volumes of telemetry data across many channels, each representing elements such as position, velocity, and attitude.

The challenge is to transform this data into actionable insight. Instead of manually combing through plots or spreadsheets, engineers use Sift to ingest, visualize, and automatically evaluate orbit determination performance. The result is a repeatable workflow that allows teams to verify positioning accuracy with confidence and speed.

The problem: hiding in plain sight

When simulation telemetry is ingested into Sift, the platform automatically parses the raw data and organizes it by Assets, such as the satellite, and their associated Channels, including true position and estimated position. This structure makes it easy for engineers to begin visualizing key parameters in Explore, where true and estimated position data can be plotted together.

At first, the plotted results in Explore often appear perfectly aligned. When the true position and estimated position Channels are displayed together, the traces seem identical, suggesting that the estimation algorithm is performing as expected. However, this view can be misleading. Orbital position values typically span thousands of kilometers, so a five-meter discrepancy, which is the exact threshold engineers need to validate, barely registers at that scale and is effectively invisible.
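A rough back-of-the-envelope check shows why the error disappears on the plot. The sketch below assumes an illustrative position axis spanning -7,000 km to +7,000 km (roughly a low Earth orbit radius in an Earth-centered frame) and a plot 1,000 pixels tall. Both numbers are assumptions chosen for illustration, not values from a Sift dataset.

```python
# Illustrative assumptions, not values from a real dataset.
AXIS_SPAN_M = 14_000_000.0   # position axis covering -7,000 km to +7,000 km
PLOT_HEIGHT_PX = 1_000       # vertical resolution of the plot
THRESHOLD_M = 5.0            # accuracy requirement from the text


def error_height_px(error_m: float, axis_span_m: float, plot_height_px: int) -> float:
    """Return how many pixels tall an error of `error_m` appears on the plot."""
    return error_m / axis_span_m * plot_height_px


pixels = error_height_px(THRESHOLD_M, AXIS_SPAN_M, PLOT_HEIGHT_PX)
print(f"A {THRESHOLD_M} m error covers about {pixels:.5f} px")
```

Under these assumptions the five-meter threshold occupies well under a thousandth of a pixel, which is why the two traces look identical.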

This challenge is common in orbit determination analysis. Tiny but critical deviations are hidden within large-scale telemetry ranges. Without a derived metric that isolates and quantifies the position error, the true performance of the estimation algorithm remains buried in the data.

Sift’s approach: creating the signal that is needed

To validate orbit determination performance, GNC engineers often need to create new telemetry signals that are not present in the original dataset. In Sift, this is done using Calculated Channels, which allow teams to define new derived metrics directly from existing telemetry data.

One common example is the position estimation error, which represents the difference between the satellite’s true and estimated position. Engineers can calculate this value as the Euclidean distance between the two sets of coordinates: the square root of the sum of the squared differences along each axis.
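As a minimal sketch of that calculation outside of Sift, the Python below computes the error for a single sample. The function name and the sample coordinates are hypothetical, and this is not Sift's Calculated Channel expression syntax.

```python
import math
from typing import Sequence


def position_error_m(true_pos: Sequence[float], est_pos: Sequence[float]) -> float:
    """Euclidean distance between true and estimated position, in the inputs' units."""
    if len(true_pos) != len(est_pos):
        raise ValueError("position vectors must have the same number of components")
    return math.sqrt(sum((t - e) ** 2 for t, e in zip(true_pos, est_pos)))


# Hypothetical samples: (x, y, z) in meters for one timestamp.
true_position = (7_000_000.0, 0.0, 0.0)
estimated_position = (7_000_003.0, 4.0, 0.0)

print(position_error_m(true_position, estimated_position))  # 5.0
```

Applying the same function to every timestamp yields a single error series that can be plotted on its own axis, independent of the much larger position values.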

Once defined, this Calculated Channel can be saved to the satellite’s Asset, ensuring that the same calculation is automatically applied to all future simulations and test datasets. This approach promotes consistency, eliminates repetitive setup work, and standardizes how teams measure performance across missions.

By visualizing the position estimation error Channel in Explore, engineers can immediately see how estimation accuracy changes over time. What was once hidden in large-scale position data becomes a clear, continuous signal that highlights even the smallest deviations from expected performance.

Putting analysis on autopilot with Rules

Once engineers have defined the position estimation error, the next step is to automate how it is evaluated. Manually scanning through long simulations for threshold violations or sudden spikes can be slow and inconsistent. In Sift, this process can be automated using Rules.

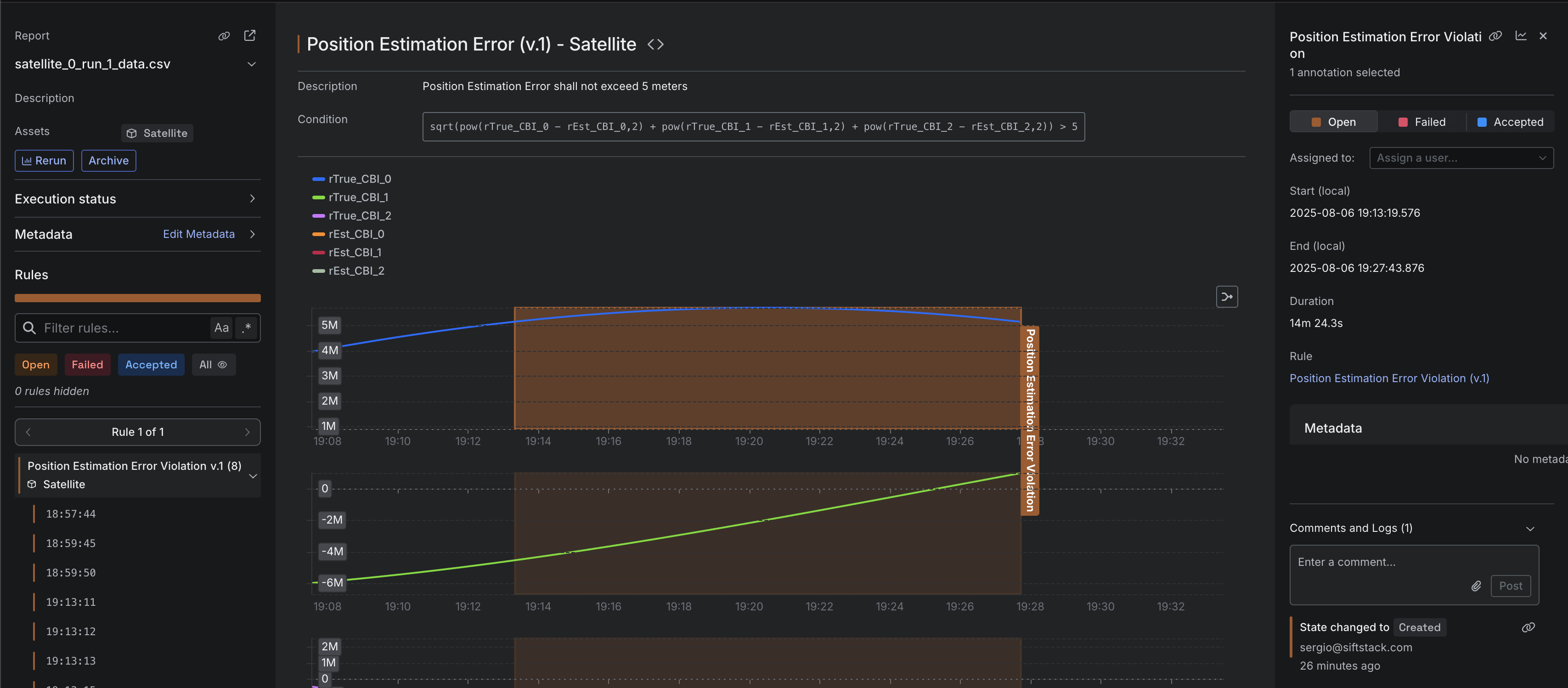

Rules allow engineers to define logical conditions that continuously check telemetry data for threshold breaches, anomalies, or unexpected states. For orbit determination, a simple example might involve creating a Rule that flags any instance where the position estimation error exceeds the required accuracy threshold, such as the five-meter margin described earlier.

After the Rule is defined, Sift automatically scans the dataset and generates Annotations for each instance that meets the condition. Every Annotation contains the necessary context, including the time window, related Channels, and any associated metadata. Engineers can then categorize each event as Accepted if it reflects expected behavior, such as a brief sensor dropout, or Failed if it points to an issue that requires deeper analysis, like a filter tuning error or a degraded sensor.
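The evaluation described above can be approximated in a few lines of Python. This is a sketch under stated assumptions rather than Sift's Rule syntax or Annotation schema: the `Annotation` class, its fields, and the sample data are all hypothetical, and the five-meter default comes from the accuracy requirement mentioned earlier.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Annotation:
    """Hypothetical record of one contiguous threshold violation."""
    start_s: float
    end_s: float
    peak_error_m: float


def flag_exceedances(times_s: Sequence[float], errors_m: Sequence[float],
                     threshold_m: float = 5.0) -> List[Annotation]:
    """Group consecutive samples above the threshold into annotations."""
    annotations: List[Annotation] = []
    current: Optional[Annotation] = None
    for t, err in zip(times_s, errors_m):
        if err > threshold_m:
            if current is None:
                # First sample of a new violation window.
                current = Annotation(start_s=t, end_s=t, peak_error_m=err)
            else:
                # Extend the open window and track its worst error.
                current.end_s = t
                current.peak_error_m = max(current.peak_error_m, err)
        elif current is not None:
            # Error dropped back under the threshold: close the window.
            annotations.append(current)
            current = None
    if current is not None:
        annotations.append(current)
    return annotations


# Hypothetical error samples at 1 s spacing.
times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
errors = [1.2, 4.8, 5.6, 7.1, 3.0, 2.2, 5.1]

for annotation in flag_exceedances(times, errors):
    print(annotation)
```

Grouping consecutive violations into one window, instead of flagging each sample, keeps the review list short: here two events are reported (2.0 s to 3.0 s, and a single sample at 6.0 s) rather than three separate samples.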

By automating these evaluations, teams can maintain consistent monitoring across every simulation and mission dataset. Rules transform raw telemetry into actionable insight, allowing engineers to focus on diagnosis and improvement rather than manual data review.

Building a reusable analysis toolkit in Sift

The workflow of creating calculated metrics and pairing them with automated Rules does more than validate a single orbit determination run. It establishes a repeatable framework that can be applied to nearly any aspect of satellite telemetry analysis.

Once these calculations and Rules are saved in Sift, teams can reuse them across multiple datasets, missions, or vehicles without redefining the logic each time. This consistency helps standardize how performance is measured and ensures that every analysis follows the same criteria. For example, engineers can extend the same approach to:

- Attitude control: Create a Calculated Channel to measure the difference between commanded and actual attitude, then set a Rule to flag deviations beyond the expected threshold.

- Sensor performance: Define Rules to identify readings that fall outside of operational ranges, helping teams detect sensor drift or degradation early.
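Both extensions follow the same pattern of a derived metric plus a threshold check. The sketch below assumes attitude is represented as unit quaternions in (w, x, y, z) order and uses the standard angle-between-quaternions formula. The function names, the 1 degree limit, and the sensor range are hypothetical placeholders, not Sift defaults.

```python
import math
from typing import Sequence


def attitude_error_deg(q_cmd: Sequence[float], q_act: Sequence[float]) -> float:
    """Smallest rotation angle between two unit quaternions, in degrees."""
    dot = abs(sum(c * a for c, a in zip(q_cmd, q_act)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))


def out_of_range(value: float, low: float, high: float) -> bool:
    """Return True when a reading falls outside its operational range."""
    return not (low <= value <= high)


# Hypothetical samples: identity command vs. a 2 degree rotation about the z axis.
half_angle = math.radians(2.0) / 2.0
q_commanded = (1.0, 0.0, 0.0, 0.0)  # (w, x, y, z)
q_actual = (math.cos(half_angle), 0.0, 0.0, math.sin(half_angle))

error_deg = attitude_error_deg(q_commanded, q_actual)
print(f"attitude error: {error_deg:.3f} deg, flagged: {error_deg > 1.0}")

# Hypothetical temperature reading checked against an assumed -20 to 60 range.
print(out_of_range(85.0, low=-20.0, high=60.0))
```

Taking the absolute value of the dot product treats a quaternion and its negation as the same orientation, so the reported error is always the shorter of the two possible rotations.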

By saving and reusing these Rules, engineers effectively build a shared library of analytical tools. This not only accelerates future analysis but also strengthens consistency and traceability across teams. With Sift, every new mission benefits from the knowledge and workflows established in the last.
